What is a Large Language Model?

AI models explained clearly. The fundamental issue with today's LLMs.

There is a lot of conflicting information regarding today’s AI systems. In this post, I will try to cut through the viral news and explain the essential function of LLMs as well as their unique strengths and limitations.

Introduction to LLMs

Core Functionality:

• Primary Goal:

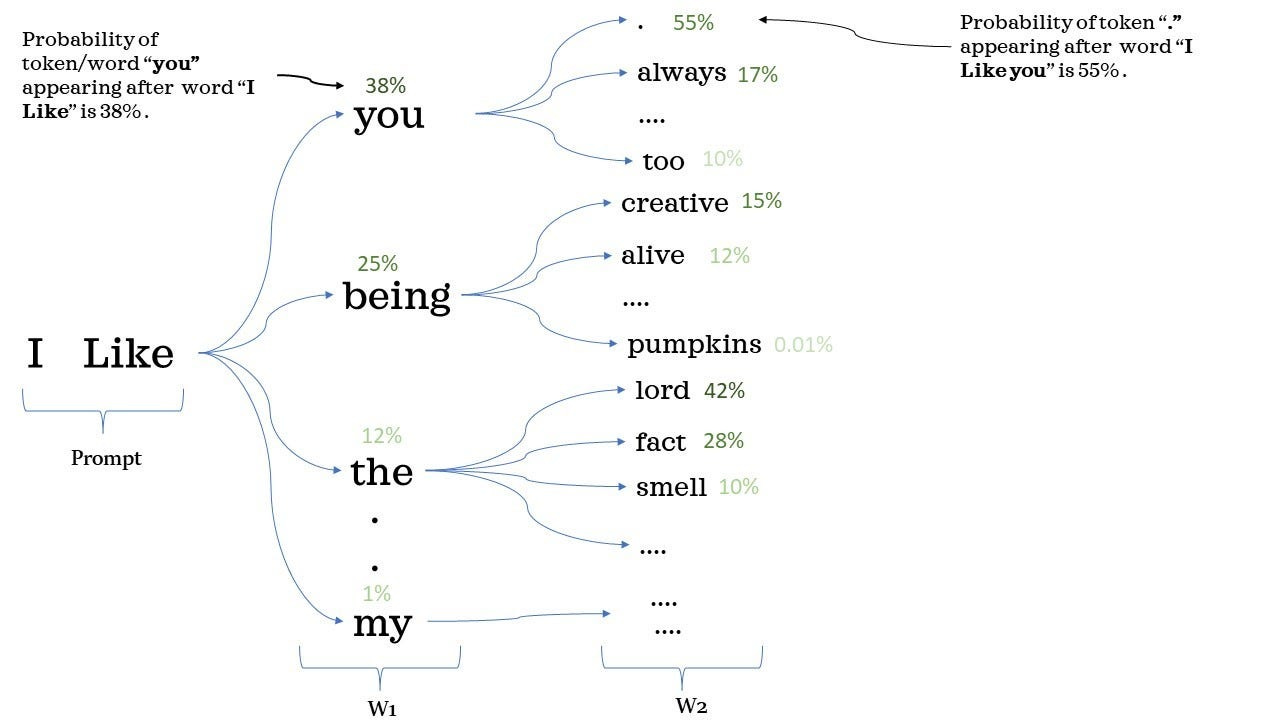

The primary goal of LLMs is to predict the next “token” of an input.

Definition: “Token”: Tokens are groups of characters, typically around 4 in length. These might not be full words, but common groupings of letters, spaces, and punctuation that can be strung together to form common words and phrases.
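To make the idea of a token concrete, here is a minimal sketch of splitting text into pieces. The vocabulary `TOY_VOCAB` and the greedy longest-match rule are assumptions made for illustration only. Real tokenizers learn a much larger vocabulary from data and use more involved algorithms.

```python
# Hypothetical toy vocabulary. Real tokenizers learn many thousands of
# pieces from data; these few are hand-picked for the example.
TOY_VOCAB = ["predict", "ing", " the", " next", " token", "s"]


def tokenize(text, vocab):
    """Split text into tokens by greedily taking the longest matching piece."""
    pieces = sorted(vocab, key=len, reverse=True)  # try longer pieces first
    tokens = []
    i = 0
    while i < len(text):
        for piece in pieces:
            if text.startswith(piece, i):
                tokens.append(piece)
                i += len(piece)
                break
        else:
            # No known piece matches here: fall back to a single character.
            tokens.append(text[i])
            i += 1
    return tokens


tokens = tokenize("predicting the next tokens", TOY_VOCAB)
print(tokens)
```

Notice that the pieces are not whole words: "predicting" becomes two tokens, and the leading space is part of " the" and " next".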

• Autoregression:

Once a token is predicted, it is appended to the input, and the extended sequence is fed back into the model to predict the next token.
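The loop described here can be sketched in a few lines. The lookup table `TOY_NEXT_TOKEN` and the function `predict_next` are hypothetical stand-ins for a real model. A real LLM looks at the whole sequence and computes probabilities with a neural network, while this toy only looks at the last token.

```python
# Hypothetical probability table standing in for a trained model.
TOY_NEXT_TOKEN = {
    "The": {" cat": 0.6, " dog": 0.4},
    " cat": {" sat": 0.7, " ran": 0.3},
    " sat": {" down": 0.9, ".": 0.1},
    " down": {".": 1.0},
}


def predict_next(tokens):
    """Return the most probable next token, or None if nothing is known."""
    candidates = TOY_NEXT_TOKEN.get(tokens[-1])
    if not candidates:
        return None
    return max(candidates, key=candidates.get)


def generate(prompt_tokens, max_new_tokens=10):
    """Autoregression: predict a token, append it, and feed everything back in."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        next_token = predict_next(tokens)
        if next_token is None:
            break
        tokens.append(next_token)  # the output becomes part of the next input
    return tokens


print("".join(generate(["The"])))
```

Each predicted token becomes part of the input for the next prediction, which is why an early wrong turn influences everything generated after it.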

One of the biggest players in AI today is a company called OpenAI, which kicked off the current race to develop LLMs with “ChatGPT”.

GPT stands for Generative Pre-trained Transformer. These transformers can work on any type of data, but in the case of ChatGPT, it transforms text inputs specifically and is therefore called a Large Language Model.

Generative: The model generates new text based on next-token prediction.

Pre-trained: The model is trained beforehand on large amounts of data. Once training ends, its parameters are fixed, so it does not learn anything new while you use it.

Transformer: This refers to the architecture of the model, which uses self-attention mechanisms to process input data. Transformers are highly effective for natural language processing tasks due to their ability to handle long-range dependencies and parallelize training.

The model’s parameters after training are deeply interconnected settings that have been finely adjusted to minimize error when predicting the next token. During training, the model compares the token it predicted with the actual token in the training data. It then adjusts the parameters slightly so that the actual token becomes more likely, and repeats this process across an enormous amount of text.
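The compare-and-adjust cycle can be illustrated with a deliberately tiny example. Everything here is an assumption made for illustration: the three-word `VOCAB`, the single training example, and the use of plain gradient descent on a cross-entropy loss. A real LLM has billions of parameters and a far more elaborate setup, but the principle of nudging parameters to make the actual token more likely is the same.

```python
import math

VOCAB = ["mat", "dog", "moon"]  # hypothetical candidates for the next token


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train(actual_token, steps=200, learning_rate=0.5):
    """Adjust one score per candidate so the actual token becomes more likely."""
    scores = [0.0] * len(VOCAB)  # the "parameters" of this toy model
    target = VOCAB.index(actual_token)
    losses = []
    for _ in range(steps):
        probs = softmax(scores)
        losses.append(-math.log(probs[target]))  # error: low when target is likely
        for i in range(len(scores)):
            # Gradient of the cross-entropy loss with respect to each score.
            gradient = probs[i] - (1.0 if i == target else 0.0)
            scores[i] -= learning_rate * gradient
    return scores, losses


scores, losses = train("mat")
print(f"loss went from {losses[0]:.3f} to {losses[-1]:.3f}")
print(dict(zip(VOCAB, (round(p, 3) for p in softmax(scores)))))
```

The model starts out guessing all three words equally and ends up strongly preferring the one that appeared in the training data.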

This training process is extremely energy-intensive and takes massive supercomputers months to complete. However, once you have the parameters, you can run the model with a tiny fraction of the energy used during training.

Why today’s LLMs are not Reasoning Engines.

When I say that today’s models cannot reason, I mean that they cannot generalize any core principles from their training data. They are prediction machines, and the quality of their outputs is proportional to the quality of their inputs. Consider this simple prompt:

Prompt:

Peter has 5 candles that are all the same length. He lights them all at the same time. After a while, he blows out the candles one after the other. Which of the five candles was the first one he has blown out?

Here is a figure of the five candles after they have been blown out. The number of = represents the length of the candle. Respond with the label of the candle that has been blown out first by Peter.

1) ====

2) =======

3) ========

4) =

5) ==

GPT-4o (OpenAI’s most capable model):

The candle that was blown out first by Peter is the one that is shortest, as it had the least amount of time to burn. According to the lengths given:

1. ====

2. =======

3. ========

4. =

5. ==

The shortest candle is labeled 4. Therefore, the first candle blown out by Peter is candle number 4.

This seems a bit strange… The clear conclusion, which I’m sure you reached, is that the first candle to be blown out would be the longest, since it had the least time to burn down. And yet the LLM is convinced that the shortest candle burned for the least amount of time. Okay, let’s do another example.

Prompt:

McDonalds Vegetarian chicken nuggets come in small, medium or large boxes of 5, 6 or 8 nuggets respectively. Philip wants 40 nuggets, and can only buy one size of box for his whole order. List all the sizes of box he cannot currently buy, assuming he has no access to any form of payment and is in a coma?

A) small only

B) medium only

C) large only

D) small, medium or large

GPT-4o:

Philip wants to buy exactly 40 nuggets, and he can only buy one size of box for his entire order. The available box sizes are small (5 nuggets), medium (6 nuggets), and large (8 nuggets).

Let’s check each box size to see if Philip can buy exactly 40 nuggets:

1. Small box (5 nuggets):

Philip can buy 8 small boxes to get 40 nuggets (since 5 nuggets per box multiplied by 8 boxes equals 40 nuggets). So, he can buy the small box size.

2. Medium box (6 nuggets):

Philip cannot buy exactly 40 nuggets with medium boxes because 40 is not a multiple of 6. If he buys 6 nuggets per box, there is no whole number of boxes he can buy to get exactly 40 nuggets. So, he cannot use the medium box size.

3. Large box (8 nuggets):

Philip can buy 5 large boxes to get 40 nuggets (since 8 nuggets per box multiplied by 5 boxes equals 40 nuggets). So, he can buy the large box size.

Therefore, the only size of box that Philip cannot use to get exactly 40 nuggets is the medium box.

The answer is: B) medium only

As you can see, the model completely ignores the fact that Philip is in a coma and has no access to any form of payment, which means he cannot buy any size of box. The reasons for this are complex, but they come down to the fact that LLMs are memorizing and predicting machines. GPT-4o treated the question like a math problem because it has seen many of those in its training data. You can ask it PhD-level math problems, and it will get them correct a lot of the time, yet it consistently fails simple logic puzzles like this one.

One topic that is very important when considering the limitations of LLMs is hallucination. Small errors in the output of a model compound over time as the model continues to generate text based on those errors. If you look at the beginning of the LLM’s response to the McDonald’s prompt, it does not mention Philip being in a coma or not having any money. It looks exactly like a grade school math problem. These tokens are then used to continue the string, and as the string continues, the probability that the model will remember or use the information that he is in a coma and has no money decreases.

The LLM’s output depends on its training data as well as on the text it has already generated. The training data is guaranteed to have errors, as it is not grounded in reality, and therefore an LLM is really just a hallucination machine. It is time to talk about reality.

The Fundamental Issue with LLM Training

Life on Earth has evolved steadily. Using the laws of physics, organisms navigate their environment, controlling the resources around them in order to sustain themselves and reproduce. All of this is facilitated by the information that has been developing within the organisms’ DNA. Small changes to this information arise over time through mutation. If a mutation helps the organism under the laws of physics, it stays. If it does not, the organism becomes less competitive and dies out, and the mutation disappears with it. Life’s development, and its careful curation of positive attributes, is a direct result of the reality check that is physics.

The real world is Truth. The best training feedback in the entire universe has nothing to do with words. Words are flawed proxies for reality, and it is difficult to get anything useful done when operating purely in the space of semantics. Life could not form without the physics that rooted its development in truth.

Humans are good at affecting their environment in ways that maximize their prosperity. This is because biological organisms have developed alongside physics to maximize their prosperity. LLMs have developed alongside flawed human words to maximize their ability to predict flawed human words. There is not much truth in flawed human words. The real secret to life is that it developed with Reality as its judge. Reality, the only source of Truth.

As I said earlier, the data that LLMs digest directly controls the “Truth” of the output. The more truthful the data, the more truthful the output. The holy grail of training is physics. Although we are not quite there with LLMs, this is not an insurmountable problem, and what we have today is still incredible and useful for many things.

The Unique Uses for Natural Language Processing

A good way to think about today’s models is as highly advanced search engines. They have ingested a large amount of data and can connect relevant pieces of information together. If the models are trained on more data, they will have a greater ability to accurately recreate this data in an output. AI customer service is one example where LLMs will be uniquely qualified. Given a detailed prompt, a good language model can tutor you on a subject, write a convincing poem, or generate a list of ideas. Although such a response might convince you that the model is really coming up with this stuff, it is more like a search engine. As you use these models more, you will begin to see through the haze of what at first seems to be very convincing writing. Language models tend to be confidently wrong, answering in the same assured tone whether or not they are correct. On some level, LLMs today are akin to highly sophisticated plagiarism engines, capable of generating convincing writing about almost any topic, when in reality the output is just the most probable writing given the input and training data.

I encourage you to play with these models. You will be amazed at how useful they can be and equally surprised by how flawed their responses can be with seemingly simple questions. However, these systems will continue to grow in capability, and issues will continue to be solved with clever techniques.

We can all learn a lesson from LLMs. Like an LLM, the quality of our outputs is determined by the quality of our inputs. Curate your inputs wisely, and don’t rely on words as your source of truth. Look at the world around you, and let physics be the judge. Good luck in your pursuit of truth!

If you are interested in educating yourself further on the strengths and weaknesses of today’s artificial intelligence, I cannot recommend this YouTube channel enough. He goes into great depth on everything in AI and cuts through all of the bad information that has the tendency to circulate.